Shocking Cases, Reviewer Rants, Score Dramas, and the True Face of CV Top-tier Peer Review!

“Just got a small heart attack reading the title.”

— u/Intrepid-Essay-3283, Reddit

[image: giphy.gif]

Introduction: ICCV 2025 — Not Just Another Year

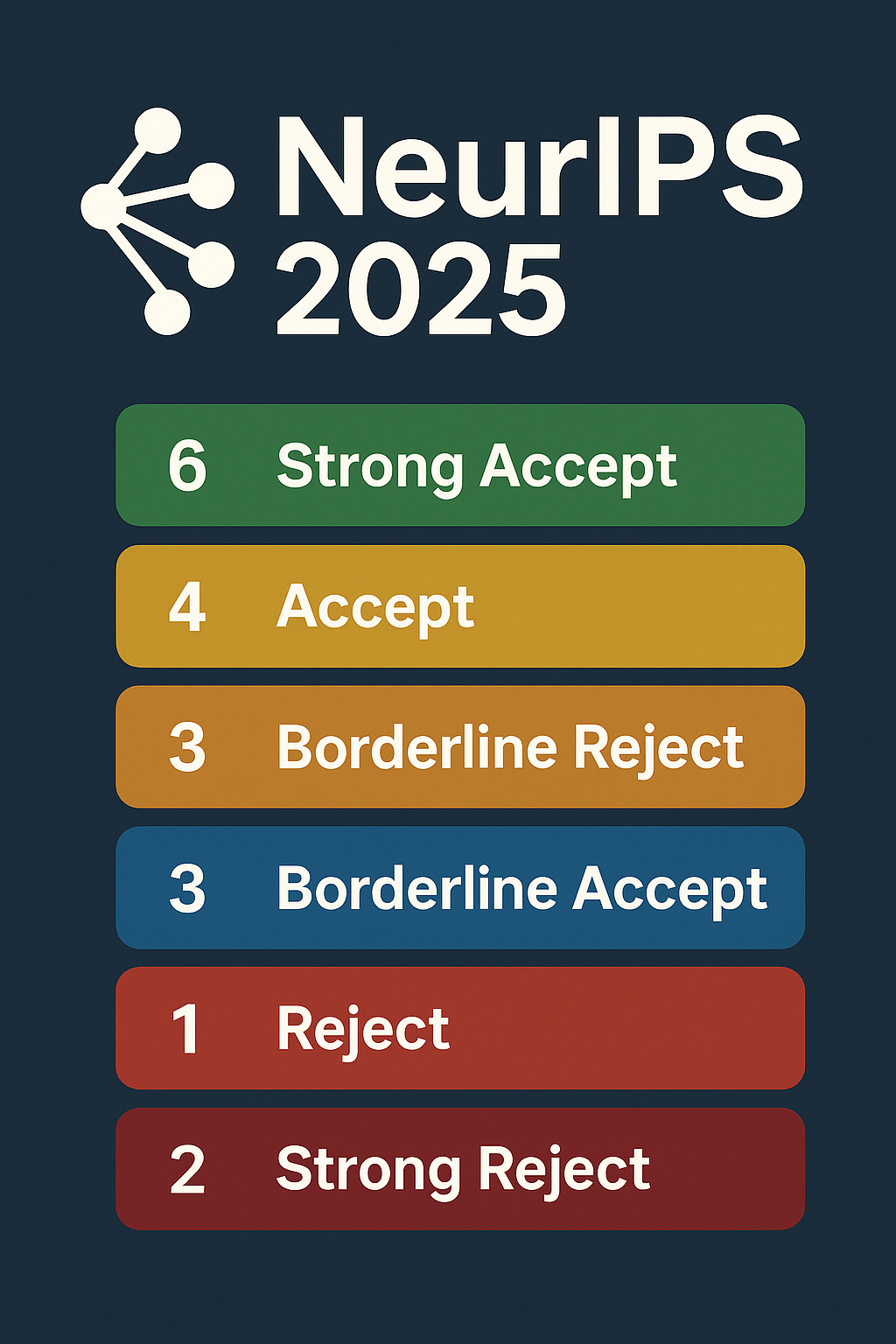

ICCV 2025 might have broken submission records (11,239 papers! 🤯), but what really set this year apart was the open outpouring of review experiences, drama, and critique across communities like Zhihu and Reddit. If you think peer review is just technical feedback, think again. This year, it was a social experiment in bias, randomness, AI-detection accusations, and — sometimes — rare acts of fairness.

Below, we dissect dozens of real cases reported by the community. Expect everything: miracle accepts, heartbreak rejections, reviewer bias, AC heroics, AI accusations, desk rejects, and score manipulation. Plus, we bring you the ultimate summary table — all real, all raw.

The Hall of Fame: ICCV 2025 Real Review Cases

Here’s the full table of community-reported cases we collected. Each row is a real story. Find your favorite drama!

| # | Initial Score | Final Score | Rebuttal Effect | Decision | Reviewer/AC Notes / Notable Points | Source/Comment |
|---|---|---|---|---|---|---|
| 1 | 4/4/2 | 5/4/4 | +1, +2 | Accept | AC sided with authors after strong rebuttal | Reddit, ElPelana |
| 2 | 5/4/4 | 6/5/4 | +1, +1 | Reject | Meta-review agreed novelty, but blamed single baseline & "misleading" boldface | Reddit, Sufficient_Ad_4885 |
| 3 | 5/4/4 | 5/4/4 | None | Reject | Several strong scores, still rejected | Reddit, kjunhot |
| 4 | 5/5/3 | 6/5/4 | +1, +2 | Accept | "Should be good" - optimism confirmed! | Reddit, Friendly-Angle-5367 |
| 5 | 4/4/4 | 4/4/4 | None | Accept | "Accept with scores of 4/4/4/4 lol" | Reddit, ParticularWork8424 |
| 6 | 5/5/4 | 6/5/4 | +1 | Accept | No info on spotlight/talk/poster | Reddit, Friendly-Angle-5367 |
| 7 | 4/3/2 | 4/3/3 | +1 | Accept | AC "saved" the paper! | Reddit, megaton00 |
| 8 | 5/5/4 | 6/5/4 | +1 | Accept | (same as #6, poster/talk unknown) | Reddit, Virtual_Plum121 |
| 9 | 5/3/2 | 4/4/2 | mixed | Reject | Rebuttal didn't save it, "incrementality" issue | Reddit, realogog |
| 10 | 5/4/3 | - | - | Accept | Community optimism for "5-4-3 is achievable" | Reddit, felolorocher |
| 11 | 4/4/2 | 4/4/3 | +1 | Accept | AC fought for the paper, luck matters! | Reddit, Few_Refrigerator8308 |
| 12 | 4/3/4 | 4/4/5 | +1 | Accept | Lucky with AC | Reddit, Ok-Internet-196 |
| 13 | 5/3/3 | 4/3/3 | -1 (from 5 to 4) | Reject | Reviewer simply wrote "I read the rebuttals and updated my score." | Reddit, chethankodase |
| 14 | 5/4/1 | 6/6/1 | +1/+2 | Reject | "The reviewer had a strong personal bias, but the ACs were not convinced" | Reddit, ted91512 |
| 15 | 5/3/3 | 6/5/4 | +1/+2 | Accept | "Accepted, happy ending" | Reddit, ridingabuffalo58 |
| 16 | 6/5/4 | 6/6/4 | +1 | Accept | "Accepted but not sure if poster/oral" | Reddit, InstantBuffoonery |
| 17 | 6/3/2 | - | None | Reject | "Strong accept signals" still not enough | Reddit, impatiens-capensis |
| 18 | 5/5/2 | 5/5/3 | +1 | Accept | "Reject was against the principle of our work" | Reddit, SantaSoul |
| 19 | 6/4/4 | 6/6/4 | +2 | Accept | Community support for strong scores | Reddit, curious_mortal |
| 20 | 4/4/2 | 6/4/2 | +2 | Accept | AC considered report about reviewer bias | Reddit, DuranRafid |
| 21 | 3/4/6 | 3/4/6 | None | Reject | BR reviewer didn't submit final, AC rejected | Reddit, Fluff269 |
| 22 | 3/5/5 | 5/5/5 | +2 | Accept | "Any chance for oral?" | Reddit, Beginning-Youth-6369 |
| 23 | 5/3/2 | - | - | TBD | "Had a good rebuttal, let's see!" | Reddit, temporal_guy |
| 24 | 4/3/4 | - | - | TBD | "Waiting for good results!" | Reddit, Ok-Internet-196 |
| 25 | 5/5/4 | 5/5/4 | None | Accept | "555 we fn did it boys" | Reddit, lifex_ |
| 26 | 6/3/3 | 5/5/4 | - | Accept | "Here we go Hawaii♡" | Reddit, DriveOdd5983 |
| 27 | 5/5/4 | 5/5/5 | +1 | Accept | "Many thanks to AC" | Reddit, GuessAIDoesTheTrick |
| 28 | 3/4/5 | 5/4/5 | +2 | Accept | "My first Accept!" | Reddit, Fantastic_Bedroom170 |
| 29 | 4/4/2 | 2/3/2 | -2, -2 | Reject | "Reviewers praised the paper, but still rejected" | Reddit, upthread |
| 30 | 5/4/4 | 5/4/4 | None | Reject | "Another 5/4/4 reject here!" | Reddit, kjunhot |
| 31 | 4/3/2 | 4/3/2 | None | TBD | "432 with hope" | Zhihu, 泡泡鱼 |
| 32 | 4/4/4 | 4/4/4 | None | Accept | "3 borderline accepts, got in!" | Zhihu, 小月 |
| 33 | 5/5/3 | 5/5/5 | +2 | Accept | "5-score reviewer roasted the 3-score reviewer" | Zhihu, Ealice |
| 34 | 5/5/4 | 5/5/5 | +1 | Accept | "Highlight downgraded to poster, but happy" | Zhihu, Frank |
| 35 | 1/3/5 | 2/4/5 | +1/+2 | Reject | "Met a 'bad guy' reviewer" | Zhihu, Frank |
| 36 | 2/3/5 | 4/4/5 | +2 | Accept | "Congrats co-authors!" | Zhihu, Frank |
| 37 | 4/3/2 | 4/3/2 | None | Accept | "AC appreciated explanation, saved the paper" | Zhihu, Feng Qiao |
| 38 | 4/4/2 | 5/4/3 | +1/+1 | Accept | "After all, got in!" | Zhihu, 结弦 |
| 39 | 4/4/1 | 4/4/1 | None | TBD | "One reviewer 'writing randomly'" | Zhihu, ppphhhttt |
| 40 | 4/4/3/2 | - | - | TBD | "Asked to use more datasets for generalization" | Zhihu, 随机 |
| 41 | 4/4/6 (4/4/3) | - | - | TBD | "Everyone changed scores last two days" | Zhihu, 877129391241 |
| 42 | 5/5/3 | 5/5/3 | None | Accept | "Thanks AC for acceptance" | Zhihu, Ealice |
| 43 | 4/4/3/2 | - | - | Accept | "First-time submission, fair attack points" | Zhihu, 张读白 |
| 44 | 4/4/4 | 4/4/4 | None | Accept | "Confident, hoping for luck" | Zhihu, hellobug |
| 45 | 5/5/4/1 | - | - | TBD | "Accused of copying concurrent work" | Zhihu, 凪·云抹烟霞 |
| 46 | 5/5/4 | 5/5/5 | +1 | Accept | "Poster, but AC downgraded highlight" | Zhihu, Frank |
| 47 | 6/3/2 | - | None | Reject | High initial, still rejected | Reddit, impatiens-capensis |
| 48 | 4/3/2 | 4/3/2 | None | Accept | "Average final 4, some hope" | Zhihu, 泡泡鱼 |
| 49 | 5/6/3 | 5/6/4 | +1 | Accept | "Grateful to AC!" | Zhihu, 夏影 |
| 50 | 6/5/4 | 6/6/4 | +1 | Accept | "Accepted, not sure if poster or oral" | Reddit, InstantBuffoonery |

NOTE:

This is NOT an exhaustive list of all ICCV 2025 papers; it covers only the individual cases reported in the Zhihu and Reddit discussions we collected.

Many entries were still “update pending” at the time of posting; where the author didn’t share the final result, the decision is marked TBD.

Many papers flipped between accept and reject on details such as one reviewer not updating, AC or meta-reviewer overrides, “bad guy” (mean) reviewers, and luck with the batch cutoff.

🧠 ICCV 2025 Review Insights: What Did We Learn?

1. Luck Matters — Sometimes More Than Merit

Multiple papers with 5/5/3 or even 6/5/4 were rejected. Others with one weak reject (2) got in — sometimes only because the AC “fought for it.”

"Getting lucky with the reviewers is almost as important as the quality of the paper itself." (Reddit)
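
To make the point concrete, here is a minimal sketch that takes five rows from the case table (final scores and decisions for cases 2, 3, 14, 7, and 37) and compares their mean scores. The helper name `mean_by_decision` is ours, and five hand-picked rows are an illustration, not a statistical analysis.

```python
# Five rows copied from the case table: (case number, final scores, decision).
cases = [
    (2, [6, 5, 4], "Reject"),
    (3, [5, 4, 4], "Reject"),
    (14, [6, 6, 1], "Reject"),
    (7, [4, 3, 3], "Accept"),
    (37, [4, 3, 2], "Accept"),
]


def mean_by_decision(rows):
    """Collect the mean final score of each case under its decision."""
    grouped = {}
    for _, scores, decision in rows:
        average = round(sum(scores) / len(scores), 2)
        grouped.setdefault(decision, []).append(average)
    return grouped


summary = mean_by_decision(cases)
for decision, means in summary.items():
    print(decision, means)
```

In this small sample, every rejected paper has a higher mean final score than every accepted one, which is exactly the pattern the community was complaining about.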

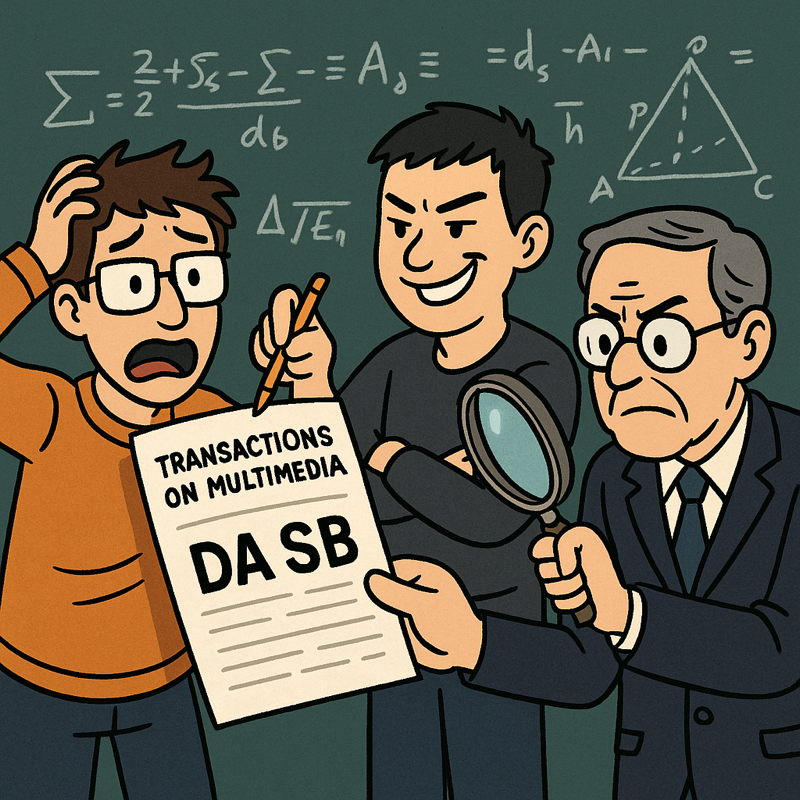

2. Reviewer Quality Is All Over the Place

Dozens reported short, generic, or careless reviews — sometimes 1-2 lines with major negative impact.

Multiple people accused reviewers of submitting AI-generated reviews (GPT/Claude/etc.); several ran AI detectors and reported >90% “AI-written.”

Desk rejects were sometimes triggered by reviewer irresponsibility: ICCV officially desk-rejected 29 papers whose authors had been flagged as "irresponsible" reviewers.

3. Rebuttal Can Save You… Sometimes

In many cases, good rebuttals led to score increases and acceptance.

But there were also numerous stories of reviewers who didn’t update, or who even lowered their scores post-rebuttal without a clear reason.

4. Meta-Reviewers & ACs Wield Real Power

In several stories, ACs overruled reviewers (for both acceptance and rejection).

Meta-reviewer “mistakes” (e.g., recommending accept but clicking reject) also happened; some authors appealed and got the result changed.

5. System Flaws and Community Frustrations

Complaints about the “review lottery”, irresponsible/underqualified reviewers, ACs ignoring rebuttal, and unfixable errors.

Many hope for peer review reform: more double-blind accountability, reviewer rating, and even rewards for good reviewing (see this arXiv paper proposing reform).

Community Quotes & Highlights

"Now I believe in luck, not just science."

— Anonymous

"Desk reject just before notification, it's a heartbreaker."

— 877129391241, Zhihu

"I got 555, we did it boys."

— lifex, Reddit

"Three ACs gave Accept, but it was still rejected — I have no words."

— 寄寄子, Zhihu

"Training loss increases inference time — is this GPT reviewing?"

— Knight, Zhihu

"Meta-review: Accept. Final Decision: Reject. Reached out, they fixed it."

— fall22_cs_throwaway, Reddit

Final Thoughts: Is ICCV Peer Review Broken?

ICCV 2025 gave us a microcosm of everything good and bad about large-scale peer review: scientific excellence, reviewer burnout, human bias, reviewer heroism, and plenty of randomness.

Takeaways:

Prepare your best work, but steel yourself for randomness.

Test early on https://review.cspaper.org before and after submission to help build reasonable expectations.

Craft a strong, detailed rebuttal — sometimes it works miracles.

If you sense real injustice, appeal or contact your AC, but don’t count on it.

Above all: Don’t take a single decision as a final judgment of your science, your skill, or your future.

Join the Conversation!

What was YOUR ICCV 2025 review experience?

Did you spot AI-generated reviews? Did a miracle rebuttal save your work?

Is the peer review crisis fixable, or are we doomed to reviewer roulette forever?

“Always hoping for the best! But worse case scenario, one can go for a Workshop with a Proceedings Track!”

— Reddit

[image: peerreview-nickkim.jpg]

Let’s keep pushing for better science — and a better system.

If you find this article helpful, insightful, or just painfully relatable, upvote and share with your fellow researchers. The struggle is real, and you are not alone!

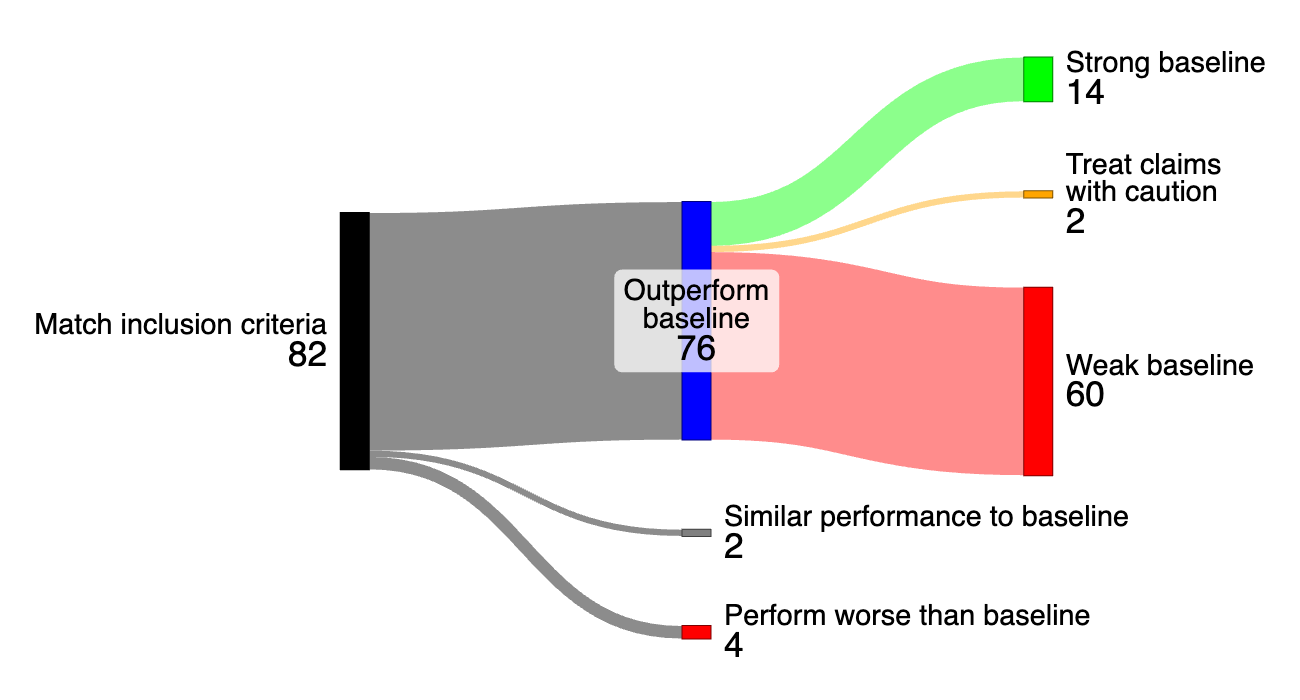

As a reviewer for the NeurIPS 2025 Datasets & Benchmarks (D&B) track, I’ve been working through my assigned submissions and, as many of you can relate, my inbox has been buzzing with notifications from the program chairs. While reviewing remains a thoughtful, human-driven task, this year’s workflow includes a few important upgrades that are worth sharing, especially for researchers who care deeply about the transparency, reproducibility, and ethics of peer review.

Here’s a quick behind-the-scenes look at how the process works in 2025 and how it differs from previous years.

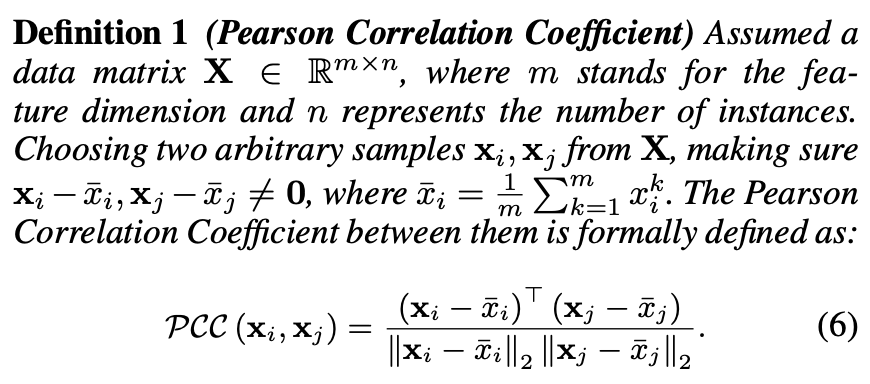

Automatic Dataset Reports: A New Gatekeeping Assistant

One of the most noticeable improvements this year is the automatic generation of a Dataset Reviewer Report for each submission that includes a dataset. This report is not a replacement for human judgment, but rather a helpful tool to assist reviewers in evaluating dataset accessibility, structure, and metadata completeness.

This report is based on a metadata format called Croissant, and it checks:

Whether the dataset URLs actually work

Whether the dataset files can be downloaded and accessed

Whether a valid license and documentation are included

Whether basic ethical and Responsible AI (RAI) information is present

Think of this as a checklist that helps filter out incomplete or misleading submissions early on — without you needing to spend your first 30 minutes chasing broken links.

You also get Python code snippets auto-generated in the report to help you load and explore the dataset directly from platforms like Kaggle, Hugging Face, or Dataverse. It’s a small touch, but really reduces friction during the review.
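
To give a feel for what such a checklist looks like, here is a minimal offline sketch in Python. It is not the official NeurIPS report generator: the function name `check_metadata` is hypothetical, the field names (`license`, `distribution`, `contentUrl`, `rai:dataCollection`, `rai:dataBiases`) are our reading of the Croissant vocabulary, and the sketch only inspects the metadata dictionary rather than downloading anything.

```python
# Fields we assume a Croissant-style metadata record should carry.
REQUIRED_FIELDS = ("name", "description", "license", "url")
# Assumed subset of Responsible AI properties; the real list is longer.
RAI_FIELDS = ("rai:dataCollection", "rai:dataBiases")


def check_metadata(meta):
    """Return a list of human-readable issues found in a metadata dict."""
    issues = []
    for field in REQUIRED_FIELDS:
        if not meta.get(field):
            issues.append(f"missing field: {field}")

    distribution = meta.get("distribution") or []
    if not distribution:
        issues.append("no downloadable files listed in 'distribution'")
    for item in distribution:
        url = item.get("contentUrl", "")
        # Only the URL shape is checked here; no network request is made.
        if not url.startswith(("http://", "https://")):
            issues.append(f"file {item.get('name', '?')!r} has no http(s) contentUrl")

    if not any(meta.get(field) for field in RAI_FIELDS):
        issues.append("no Responsible AI (rai:*) information")
    return issues


# Hypothetical record that forgets its license and RAI fields.
example = {
    "name": "toy-dataset",
    "description": "A made-up dataset used only for this example.",
    "url": "https://example.org/toy-dataset",
    "distribution": [
        {"name": "data.csv", "contentUrl": "https://example.org/data.csv"}
    ],
}
report = check_metadata(example)
print(report)
```

The real report goes further by actually fetching the URLs and files; this sketch only shows the kind of questions it asks.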

Responsible Reviewing Is Now Mandatory (Not Just Encouraged)

The Responsible Reviewing Initiative is not new, but it’s more strictly enforced this year. Reviewers are now expected to look for the following in each dataset paper:

Is the dataset publicly available and reproducible?

Are ethical considerations and data limitations addressed?

Are RAI fields (like bias, demographic info, or collection methods) present or at least acknowledged?

Is the licensing and permission status clear?

These were optional or lightly emphasized in previous years, but they now carry real weight in your evaluation — especially for a track that centers on datasets and benchmarks.

If a dataset claims to be open but is inaccessible, lacks a license, or ignores potential bias or harm, reviewers are encouraged to flag this as a major concern.

Review Process Reminders

Here are a few reminders for reviewers in 2025:

Don't use LLMs to process or summarize submissions — per NeurIPS’s LLM usage policy, reviewing is strictly human-only.

Be proactive in checking for conflicts of interest. Not all COIs are perfectly detected by the system.

Every submission matters — even if the topic is outside your direct interests, you’re expected to review it unless there’s a serious reason you cannot (in which case, contact your Area Chair).

Watch your assignment list — more papers may get added during the review period.

What’s Better Compared to Last Year?

| Feature | 2024 | 2025 |
|---|---|---|
| Dataset Accessibility Check | Manual by reviewer | Auto-checked by metadata report |
| Responsible AI Metadata | Encouraged | Now explicitly reviewed |
| Review Support Tools | Basic | 🧰 Code snippets and report summaries |
| Licensing and Ethics | Optional in many cases | More formally required |
| LLM Policy | Vague enforcement | Strict ban on use in reviews |

🧠 Takeaways for Researchers and Reviewers

The D&B track is evolving to match the increasing complexity of data-driven research. If you’re a researcher, this means submitting your dataset now requires more than just a ZIP file on Google Drive — it needs structure, documentation, and ethical awareness.

If you’re a reviewer, you now have better tools to assess those aspects — but also more responsibility to do so thoughtfully.

All of this helps build a stronger, more reproducible research ecosystem, and makes dataset contributions as robust as model papers.

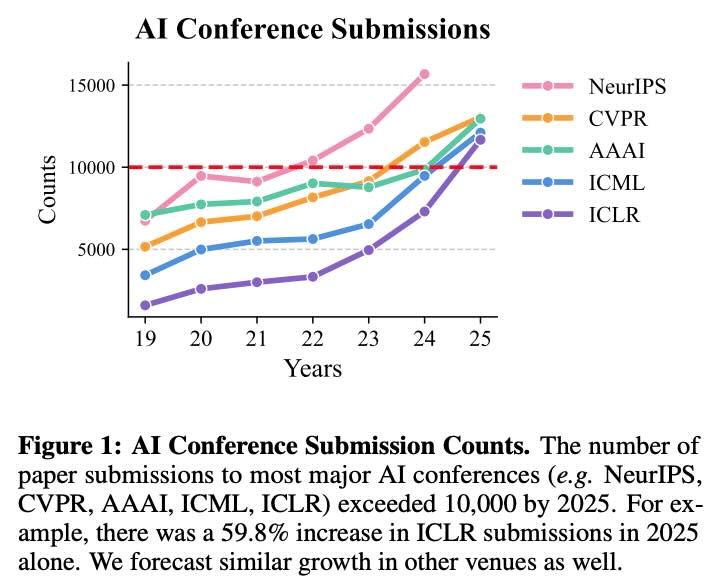

May 14, 2025 – Overleaf, the beloved online LaTeX editor, gave researchers a scare by going down on the eve of the NeurIPS 2025 deadline. Many users reported 502 server errors and sluggish loading at the worst possible time – just hours before the Anywhere on Earth cutoff for submissions. Even as thousands of authors were editing frantically late into the night, Overleaf’s official status page still showed “no incidents” when this article went online. The timing was no coincidence; with the NeurIPS 2025 full paper deadline on May 15 AoE, Overleaf’s servers likely buckled under a surge of last-minute activity. (If you refreshed Overleaf in panic only to see an error, you were most definitely not alone – outage trackers noted hundreds of users affected during the peak rush.)

[image: image.png.e3b70e14e91cf1d7e01a8c6632b21f10.png]

Déjà Vu at AI Conference Deadlines

Regular users joked that “Overleaf down before a deadline” is practically an academic tradition. Indeed, this isn’t the first time Overleaf has faltered during a major AI conference crunch. Last year, as the NeurIPS 2024 deadline approached, one observer quipped on Hacker News: “Overleaf down. If you have a paper deadline then my condolences.” Such tongue-in-cheek sympathy reflects a common experience – each season of deadlines, someone, somewhere is posting the same lament. Overleaf even suffered a notable outage in Dec 2024, unrelated to a deadline, with its team publicly acknowledging the downtime. By now, many researchers half-expect a bit of chaos whenever big submission dates roll around.

It’s not just Overleaf. OpenReview – the platform hosting paper submissions and reviews for conferences like NeurIPS, ICLR, and ICML – has also strained under deadline pressure. With thousands of authors trying to upload PDFs at once, slowdowns are routine and occasional outages have forced organizers to improvise. In some cases, deadlines even got extended by 48 hours due to technical outages (much to the relief of procrastinators everywhere!). Conference organizers constantly urge authors not to wait until the last minute, but the last-minute rush has a gravity of its own. When submission portals crawl and Overleaf won’t load, it truly feels like a rite of passage in AI research.

The Incredible Submission Surge

Why does this keep happening? Simply put, AI conference submissions have exploded in recent years, putting tremendous load on tools and sites during deadlines. For context, NeurIPS 2023 saw an “astonishing” 12,345 submissions, and that number jumped to 15,671 submissions in 2024 – all vying for a spot at the premier AI conference. Other conferences aren’t far behind: ICLR 2024 received 7,262 papers and ICML 2024 nearly 9,500. These massive waves of papers translate to tens of thousands of researchers scrambling to polish manuscripts, compile LaTeX, and upload files in the final 24 hours before the deadline. Overleaf, being the go-to collaborative editor, experiences sky-high traffic at these moments – multiple people per paper rapidly editing and recompiling documents. It’s a perfect (brain)storm: heavy server load, anxious users, and a hard countdown timer. No wonder the system occasionally cries uncle!

The strain on OpenReview (and other submission sites) is equally intense. NeurIPS and ICLR now require authors to have OpenReview profiles, and the submission system must ingest thousands of PDF uploads around the deadline hour. In peak times, OpenReview has been known to respond sluggishly or intermittently fail as everyone tries to hit “Submit” simultaneously. It’s practically an arms race: as machine learning grows, so do the submission counts – and both Overleaf and OpenReview are racing to scale up fast enough.

Community Reactions: Frustration & Humor

When Overleaf went down this time, the research community’s reactions ranged from despair to dark humor. On Reddit, users vented their anxiety: “The deadline... is within 48 hours, and I don’t have a local backup!” one panicked, watching the editor fail to load. Others chimed in about seeing “Bad Gateway” errors and worried about losing precious work. (Pro tip: many vowed never to trust cloud editing alone again – Overleaf does offer Git integration for local backups, which suddenly seemed like a lifesaver!). The sight of academics collectively screaming “WHY NOW, OVERLEAF?!” has practically become a meme of its own.
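
For anyone who wants to act on that pro tip, here is a rough Python sketch of a local backup script built on Overleaf's Git integration. Several things are assumptions on our part: the `https://git.overleaf.com/<project-id>` URL pattern, the helper names, and the `overleaf-backups` folder. It also assumes `git` is installed and that your account has Git access with credentials already configured.

```python
import subprocess
from pathlib import Path

# Assumed URL pattern for Overleaf's Git integration; check your project's
# Git menu for the exact address.
OVERLEAF_GIT_HOST = "https://git.overleaf.com"


def clone_command(project_id, backup_dir):
    """Build the git command for the first backup of a project."""
    target = Path(backup_dir) / project_id
    return ["git", "clone", f"{OVERLEAF_GIT_HOST}/{project_id}", str(target)]


def pull_command(project_id, backup_dir):
    """Build the git command that refreshes an existing backup."""
    target = Path(backup_dir) / project_id
    return ["git", "-C", str(target), "pull", "--ff-only"]


def backup(project_id, backup_dir="overleaf-backups"):
    """Clone the project once, then fast-forward it on later runs."""
    target = Path(backup_dir) / project_id
    if (target / ".git").exists():
        command = pull_command(project_id, backup_dir)
    else:
        command = clone_command(project_id, backup_dir)
    # check=True raises CalledProcessError if git fails (e.g. no network).
    subprocess.run(command, check=True)
```

Calling `backup("your-project-id")` from a scheduled job in the days before a deadline would leave you with a compilable local copy even if the site goes down; the project ID here is a placeholder for your own.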

Meanwhile, Twitter and other socials lit up with the hashtag #OverleafDown, as both complaints and jokes poured in. Some exhausted authors were genuinely upset, while others tried to lighten the mood. In one academic meme forum, a top-voted post urged frazzled grad students to “go touch some grass, Overleaf is down” – a humorous reminder to take a break (since there was nothing else to do until the site came back). On Mastodon, a researcher wryly declared, “Looks like Overleaf is down. It’s a conspiracy to stop me from working on this manuscript!” These tongue-in-cheek responses show how communal the experience has become: everyone recognizes the mix of panic and comedy that accompanies a deadline outage. Misery loves company, and in these moments the AI research community certainly comes together – if only to share a collective facepalm.

The (Deadline) Show Must Go On

Thankfully, the Overleaf outage on May 14 was resolved in time, and authors could resume editing and submit their papers (likely with a big sigh of relief). By the submission deadline, all was back online, and NeurIPS 2025 papers were safely in. Still, this episode highlights the annual drama that unfolds behind groundbreaking AI papers: not only are researchers pushing the frontiers of science, they’re also pushing the limits of online services!

In the end, the submission rush has become an integral part of conference culture – equal parts stressful and oddly camaraderie-building. Overleaf and OpenReview outages are the modern-day “battle scars” of academia, stories to be recounted over coffee after the deadline. As AI conferences continue to grow, perhaps these platforms will scale up too (one can hope!). Until then, remember to keep calm, back up your files, and maybe keep an offline LaTeX install handy. And if all else fails, there’s always that sage advice: take a deep breath, step outside for a moment – the paper chase will still be here when you return.
